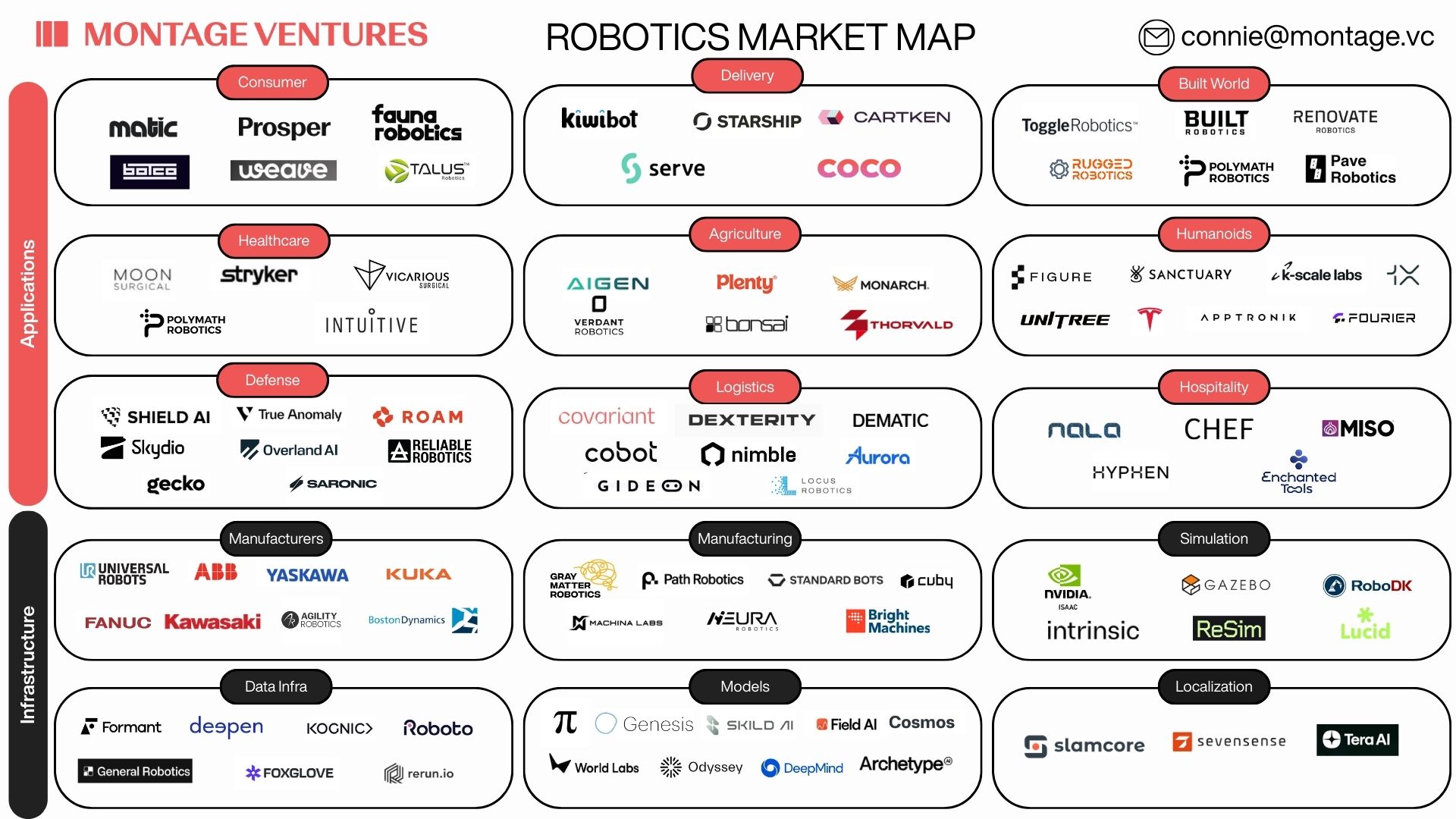

Physical AI: An RFP for Robotic Applications and Foundational Infrastructure

- $11B: global embodied AI market by 2034
- 7/10: US employers unable to find suitable employees for job vacancies
- 15%: increase in US labor productivity from AI

The Dawn of Physical AI: An RFP for Robotic Applications and Foundational Infrastructure

The Evolution of Robotics

Robotics and physical AI are undergoing a profound transformation, shifting from purpose-built machines to adaptable systems capable of generalizing across diverse tasks and environments.

From the 1960s through the 1980s, the first industrial robots were developed, primarily for repetitive manufacturing tasks. Robotic arms became common on assembly lines, improving efficiency and worker safety. Early force and torque sensors emerged in the 1970s. The era was defined by the rise of industrial robots, while the vision of consumer robots as household helpers failed to materialize.

In the early 2000s, industrial robots became more versatile, integrating advanced sensors and control systems for greater precision and adaptability. Mobile robots and autonomous systems started to appear in logistics, healthcare, and other sectors. Home service robots like the Roomba brought robotics into daily life. These robots required significant R&D investment and relied on sensors and hand-designed algorithms to navigate pre-defined environments. Such deterministic approaches struggle to scale to fully autonomous systems.

In the 2020s, humanoid robots like Boston Dynamics’ Atlas and Tesla’s Optimus demonstrate advanced mobility and task versatility, while autonomous assistants are becoming more integrated into everyday life. Advances in AI, vision-language-action models (VLAs), machine learning, and sensor technology are enabling new forms of perception, interaction, and adaptation in unseen environments.

As we look at the impact robotics and physical AI will have on the future, we’re excited about the growth of new opportunities. The global professional service robot market is currently estimated at $38B, driven by sectors like healthcare, logistics/transportation, and defense/security. As automation takes embodied form, robots that can think, learn, reason, and interact with their environments can perform certain tasks better than humans. With labor representing ~50% of global GDP, robots entering the workforce represent one of the most compelling opportunities.

A Confluence of Technological Tailwinds

Multiple technological tailwinds have created a “why now” moment for embodied AI. Below are a few categories of development and why we’re so excited about emerging applications that leverage these technologies:

Emergence of Robot Foundation Models

Robotic models need to be able to 1) adapt to different situations, 2) understand and respond to instructions or changes in their environment, and 3) be dexterous in manipulating objects. Over the past few years, off-the-shelf Vision Language Models (VLMs) and Large Language Models (LLMs) have become extremely strong perception and reasoning systems. By training on an internet’s worth of visual-language data, these foundation models have distilled visual and semantic world understanding that helps with robotic sensing and planning, especially in unstructured and open-world situations. New Vision-Language-Action (VLA) models unify multimodal inputs across vision and text into actions: they can understand an image, process language, and perform real-world actions. The vision component interprets visual data from cameras or sensors, the language component processes natural language instructions, and the action component generates physical actions or motor commands.
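One way to make the “action” output fit into a language-model vocabulary, used by Google DeepMind’s RT-2, is to discretize each continuous action dimension into a fixed number of uniform bins (256 in RT-2) so an action can be emitted as a short sequence of tokens. The minimal sketch below shows only that tokenize/detokenize step; the 7-D action layout and the bounds are hypothetical values chosen for illustration.

```python
import numpy as np

NUM_BINS = 256  # RT-2 discretizes each action dimension into 256 uniform bins

# Hypothetical bounds for a 7-D end-effector action:
# (dx, dy, dz, droll, dpitch, dyaw, gripper)
ACTION_LOW = np.array([-0.05, -0.05, -0.05, -0.25, -0.25, -0.25, 0.0])
ACTION_HIGH = np.array([0.05, 0.05, 0.05, 0.25, 0.25, 0.25, 1.0])

def action_to_tokens(action: np.ndarray) -> np.ndarray:
    """Map a continuous action to integer bin indices in [0, NUM_BINS - 1]."""
    clipped = np.clip(action, ACTION_LOW, ACTION_HIGH)
    normalized = (clipped - ACTION_LOW) / (ACTION_HIGH - ACTION_LOW)  # in [0, 1]
    # The top edge (normalized == 1.0) would land in bin NUM_BINS, so clamp it.
    return np.minimum((normalized * NUM_BINS).astype(int), NUM_BINS - 1)

def tokens_to_action(tokens: np.ndarray) -> np.ndarray:
    """Map bin indices back to the continuous value at each bin's center."""
    centers = (tokens + 0.5) / NUM_BINS
    return ACTION_LOW + centers * (ACTION_HIGH - ACTION_LOW)
```

The round trip loses at most half a bin width per dimension, which is the price of treating motor commands like words.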

All of these recent LLM, VLM, and VLA models have advanced the robotics field. Co-training on robot action data together with internet data helps robots acquire semantic and visual knowledge, such as recognizing symbols and reasoning, that robot action data alone does not provide. Likewise, adding data from robots with different bodies, sensors, actuators, and control systems (e.g., robotic arms, quadrupeds, drones) can improve performance on a target robot.

Notable recent robotic foundation models include:

- RT-2: Google DeepMind’s Robotics Transformer 2, released in July 2023, is a Vision-Language-Action (VLA) model that uses web-scale vision and language data to improve robotic control. The model learns from internet images and text to understand and execute instructions, and then uses that knowledge to control robots.

- Mobile ALOHA: In January 2024, researchers at Google DeepMind and Stanford University announced a low-cost, open-source hardware system for mobile manipulation that uses whole-body teleoperation to collect data. With only 50 human demonstrations per task, co-training with existing static ALOHA data increased success rates by up to 90%.

- RFM-1: In March 2024, Covariant released RFM-1, an 8-billion-parameter transformer trained on text, images, videos, robot actions, and a range of numerical sensor readings. Its training data is a massive multimodal dataset from robots operating in warehouses around the world. Using this data, RFM-1 predicts how robots will manipulate objects and interact with their surroundings.

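- Gemini Robotics: In March 2025, Google DeepMind launched Gemini Robotics, an advanced vision-language-action model built on Gemini 2.0 and tailored for robotics applications, alongside Gemini Robotics-ER, a model focused on embodied reasoning. Both are designed to understand and generalize to new situations.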

- π0: Physical Intelligence released π0, a general-purpose robotic foundation model that can be fine-tuned for a diverse range of tasks (folding laundry, cleaning a table, scooping coffee beans, etc.) on a variety of robot types (two-armed robots, single-arm platforms, mobile robots, etc.). The first version was released in October 2024, and in April 2025 the company released π0.5, with significant advances in generalization.

- Isaac GR00T: Launched by NVIDIA in March 2025, GR00T N1 is an open, fully customizable foundation model for humanoid reasoning and skills, trained on real and synthetic datasets and released for developers. GR00T N1 can generalize across common tasks, such as grasping, moving objects with one or both arms, and transferring items from one arm to another, and can perform multi-step tasks that require long context and combinations of general skills.

These foundation models are helping robots generalize actions to new scenarios, solving a variety of tasks out of the box, including tasks never seen in training. They also accept natural language as input and direction. By combining reasoning and spatial understanding, robots can instantiate entirely new capabilities in real time. Together, these advances let robots move from narrow, predetermined applications to generalized ones, pushing the industry forward.

Simulation and Reinforcement Learning

In January 2025, NVIDIA released Cosmos, a family of generative models that create virtual simulations of real-world scenarios for robot training. Cosmos world foundation models, or WFMs, give developers an easy way to generate massive amounts of photoreal, physics-based synthetic data to train and evaluate their existing models. Developers use Cosmos for video search, synthetic data generation, and model development and evaluation.

Robotics foundation models require vast, diverse, and multimodal datasets to generalize across tasks, environments, and embodiments. Robotic simulation is built upon the fundamental laws of physics, including the conservation of mass and momentum, body dynamics, contact and friction, and actuator modeling. These principles are used to predict how robots will behave in various scenarios and environments.
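As a toy illustration of two of the ingredients named above, body dynamics and contact friction, the sketch below advances a box sliding on flat ground using Newton’s second law, Coulomb friction, and semi-implicit Euler integration. It is a minimal sketch under made-up masses and friction coefficients; production simulators handle 3-D contact, articulated bodies, and actuator models far more carefully.

```python
from dataclasses import dataclass

GRAVITY = 9.81  # m/s^2

@dataclass
class Box:
    mass: float            # kg
    mu_static: float       # static friction coefficient (made-up value)
    mu_kinetic: float      # kinetic friction coefficient (made-up value)
    position: float = 0.0  # m
    velocity: float = 0.0  # m/s

def step(box: Box, applied_force: float, dt: float) -> None:
    """Advance a box sliding on flat ground by one semi-implicit Euler step."""
    normal_force = box.mass * GRAVITY
    if box.velocity == 0.0:
        # At rest: static friction cancels any push below its limit.
        if abs(applied_force) <= box.mu_static * normal_force:
            return
        direction = 1.0 if applied_force > 0 else -1.0
    else:
        direction = 1.0 if box.velocity > 0 else -1.0
    # Kinetic (Coulomb) friction opposes the direction of motion.
    friction = -box.mu_kinetic * normal_force * direction
    accel = (applied_force + friction) / box.mass  # Newton's second law
    new_velocity = box.velocity + accel * dt
    if box.velocity != 0.0 and new_velocity * box.velocity < 0:
        # Velocity crossed zero within the step: treat the box as stopped;
        # the next step re-checks whether static friction holds it.
        new_velocity = 0.0
    box.velocity = new_velocity
    box.position += box.velocity * dt  # semi-implicit: integrate with the new velocity
```

A gentle push leaves the box at rest, a harder push makes it slide, and once the push stops, friction brings it to a halt, the kind of behavior a robot must anticipate before it shoves an object across a table.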

WFMs can address this data need by generating high-quality, photorealistic, and physics-accurate synthetic data that simulates real-world environments, object interactions, and dynamic scenarios. Synthetic data is particularly crucial where collecting diverse, annotated real-world robot data is costly, risky, or impractical. Examples include letting robots practice navigation and manipulation tasks in simulation, generating labeled video and sensor data under varied weather or lighting conditions, and covering rare or dangerous scenarios. Developing and evaluating policies in virtual environments can help close the sim-to-real gap and reduce real-world trial and error.

In addition, new techniques in reinforcement learning are helping reduce the need for extensive labeled datasets by enabling robots to learn directly from their interactions with the environment.
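Tabular Q-learning is one of the simplest examples of an agent learning purely from its own interactions, with no labeled data: it only sees states, the actions it tried, and the rewards that followed. The sketch below uses a hypothetical six-cell corridor with a goal at one end; real robot RL replaces the table with deep networks and continuous actions, so treat this as an illustration of the learning loop only.

```python
import random

N_STATES = 6                           # corridor cells 0..5; cell 5 is the goal (toy task)
ACTIONS = (-1, +1)                     # move left or move right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.1  # learning rate, discount, exploration rate

def env_step(state: int, action: int) -> tuple[int, float, bool]:
    """Toy environment: reward 1.0 on reaching the goal cell, 0.0 otherwise."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def train(episodes: int = 500, seed: int = 0) -> dict:
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < EPSILON:
                action = rng.choice(ACTIONS)  # explore
            else:
                # Exploit: pick the best-known action, breaking ties at random.
                best = max(q[(state, a)] for a in ACTIONS)
                action = rng.choice([a for a in ACTIONS if q[(state, a)] == best])
            next_state, reward, done = env_step(state, action)
            # Move Q(s, a) toward reward + discounted value of the best next action.
            future = 0.0 if done else GAMMA * max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += ALPHA * (reward + future - q[(state, action)])
            state = next_state
    return q
```

After training, the greedy policy in every non-goal cell is “move right”, learned entirely from trial and error.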

Roboticists can now use more sophisticated world models: continuously updated simulations of their environments that forecast future states, plan actions, and adapt in real time. These models use deep learning to integrate spatial and temporal data, enabling robots to reroute, recalibrate tasks, and learn from new experiences on the fly.

Increasing Spatial Intelligence

Spatial intelligence in robotics enhances a robot's ability to perceive, understand, and interact with the world in three dimensions, leading to more efficient navigation, object recognition, and task completion. In humans and animals, this capability links perception with action, allowing them to predict and respond to their environment. For robots, it includes the ability to map their surroundings, interpret sensory data (like camera images and sensor readings), and make decisions based on spatial relationships.

Recent techniques like Neural Radiance Fields (NeRFs) enable robots to build 3D models of their surroundings, improving spatial awareness and interaction. NeRFs provide a continuous, implicit representation of a scene, allowing robots to predict how objects will look from new viewpoints and to complete 3D reconstructions. This improves navigation, manipulation, and overall scene understanding.
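The “predict how objects will look from new viewpoints” step in a NeRF comes from volume rendering: densities and colors sampled along a camera ray are composited into one pixel color, with each sample weighted by its opacity and by how much light survives to reach it. In a real NeRF those densities and colors come from a neural network queried at 3-D positions and view directions; in this minimal sketch they are passed in directly as arrays.

```python
import numpy as np

def render_ray(sigmas: np.ndarray, colors: np.ndarray, deltas: np.ndarray):
    """Composite samples along one camera ray, NeRF-style.

    sigmas: (N,) volume densities at the samples
    colors: (N, 3) RGB values at the samples
    deltas: (N,) distances between adjacent samples
    Returns the pixel color (3,) and the per-sample weights (N,).
    """
    alphas = 1.0 - np.exp(-sigmas * deltas)  # opacity of each ray segment
    # Transmittance: fraction of light that reaches sample i unabsorbed.
    transmittance = np.concatenate(([1.0], np.cumprod(1.0 - alphas)[:-1]))
    weights = transmittance * alphas
    return (weights[:, None] * colors).sum(axis=0), weights
```

For a ray that passes through empty space and then hits a dense red surface, nearly all the weight lands on the first surface sample, so the pixel renders red and anything behind the surface is occluded.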

Improving Multi-Sensor Approaches

A multi-sensor approach remains essential for developing robust and adaptive robotic systems. While most models prioritize vision, integrating additional sensor modalities (proximity, tactile, torque, motion, temperature) can significantly improve overall system performance and accuracy, enabling robots to operate more effectively in complex environments.

Recent breakthroughs include finger-shaped tactile sensors inspired by human fingertips, capable of detecting both the direction and magnitude of forces. These sensors can also accurately identify a wide range of materials and measure temperature, texture, slippage, and other key properties.

For instance, in industrial automation, a robotic arm equipped with tactile, torque, and vision systems can pick up objects with optimal force, detect slippage, and adjust its grasp dynamically. When handling fragile materials such as glass or electronics, tactile feedback helps prevent breakage. Additionally, proximity sensors enhance safety by detecting nearby human workers and triggering the robot to slow down or stop movement to prevent accidents. By integrating multiple inputs, robots can compensate for individual sensor limitations.
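The grasp-and-safety behavior described above can be sketched as a single control cycle that combines tactile, force, and proximity readings. This is a minimal, rule-based sketch: the thresholds, the fixed grip increment, and the linear slowdown are hypothetical choices for illustration, not a production safety controller (real collaborative robots follow dedicated safety standards).

```python
from dataclasses import dataclass

@dataclass
class SensorReadings:
    slip_detected: bool    # from tactile sensing
    grip_force: float      # N, from a force/torque sensor
    human_distance: float  # m, from a proximity sensor

# Hypothetical limits for a fragile part in a shared workspace.
MAX_GRIP_FORCE = 10.0  # N: above this, the part may break
GRIP_INCREMENT = 0.5   # N added per control cycle while slip is detected
SLOW_ZONE = 1.5        # m: start slowing down inside this distance
STOP_ZONE = 0.5        # m: stop completely inside this distance

def control_step(readings: SensorReadings, nominal_speed: float) -> tuple[float, float]:
    """Return (grip force command, arm speed command) for one control cycle."""
    grip = readings.grip_force
    if readings.slip_detected:
        # Tighten incrementally while slip persists, capped at the breakage limit.
        grip = min(grip + GRIP_INCREMENT, MAX_GRIP_FORCE)

    d = readings.human_distance
    if d <= STOP_ZONE:
        speed = 0.0
    elif d < SLOW_ZONE:
        # Scale speed linearly between the stop zone and the slow zone.
        speed = nominal_speed * (d - STOP_ZONE) / (SLOW_ZONE - STOP_ZONE)
    else:
        speed = nominal_speed
    return grip, speed
```

Each sensor covers a gap in the others: vision alone cannot feel slip, and touch alone cannot see an approaching worker.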

Rethinking the Supply Chain

The robotics and automation supply chain remains highly globalized, with critical components (motors, actuators, semiconductors) still predominantly sourced from China, Taiwan, Japan, and South Korea. Geopolitical tensions, trade wars, and tariff increases (notably between the US and China) are prompting companies to reconsider their sourcing and manufacturing strategies.

Reinforcement learning and software advancements shift much of the development and optimization process away from costly, custom physical hardware toward flexible, efficient software and simulation environments, reducing the need for high-end custom components in the final deployed system. As more companies offer pre-made robot cells and standardized robotic systems, it also becomes easier to find off-the-shelf solutions that meet specific needs. While the debate continues over whether to be fully vertically integrated and own the hardware, we expect to see companies pursue both approaches.

Opportunities We’re Excited About

New Applications in Pressing Industries

The most pressing labor shortages are in industries such as manufacturing, construction, shipping and logistics, warehousing, and retail. These industries face headwinds including supply chain disruptions, an aging population, and worker retention challenges. For example, warehouse worker turnover reaches a staggering 49% per year. These jobs are physically demanding, high-stress, and low-paying, with few growth opportunities, and turnover imposes high costs on companies.

Below are a few example categories that could benefit from robotic workers, where tasks are more structured and less variable. As the industry evolves, we expect robots to move into more tightly regulated settings where actions have significant consequences, such as healthcare or consumer homes.

- Supply Chain and Logistics: Factories need automation for assembling, packing, moving, and shipping. Companies like Covariant deploy AI-powered robots for various warehouse automation tasks, particularly goods-to-person picking, kitting, and induction. Covariant built its own foundation model, RFM-1, which provides human-like reasoning and is trained on real-world warehouse manipulation data. Robotics is also transforming logistics in areas such as unloading containers and pallets and order fulfillment. Agility Robotics has created Digit, a robot that can load and unload boxes and pallets to help fill the labor gap in logistics and manufacturing.

- Manufacturing: In the past, robots lacked the dexterity needed in manufacturing. With technologies such as multi-axis arms, robots can now better mimic manufacturing work, and they can be quickly reconfigured to support today’s shorter product life cycles. Tesla’s Optimus, for example, is being piloted on Tesla’s Fremont production line for assembly, material handling, and inventory management.

- Agriculture: Robotics can automate tasks, such as planting and harvesting, with greater speed and precision, leading to increased efficiency and productivity. Precision farming techniques enabled by robotics can optimize resource use, reducing waste and environmental impact. Additionally, robots can monitor crop and livestock health, enabling proactive interventions.

- Construction: Robots automate repetitive and time-consuming tasks, significantly reducing project timelines. For example, robotic layout systems like Dusty Robotics can mark floor plans in hours instead of days, and companies like Renovate Robotics are working to install roofing shingles faster in an industry facing contractor shortages.

- Defense/Government: Autonomous and robotic systems can perform dangerous tasks such as remotely operating heavy machinery. Unmanned aerial vehicles support defense operations in harsh terrain. Companies like Overland are building autonomous vehicles for navigating off-road terrain in dangerous areas, surveillance, and resupply missions.

- Retail/Hospitality: In retail, robots can assist with tedious inventory management, restocking, and fulfillment. In the hospitality sector, robots are being explored for customer service such as front desk and housekeeping.

- Home Automation: Robots can automate tasks, freeing up time for other activities and providing a more convenient lifestyle. Some recent examples include household chores like folding laundry (Physical Intelligence), cleaning floors (Matic), and even cooking.

- Healthcare: Robotic applications in healthcare span a wide range, from surgical applications, new mobility products, patient support and interaction, elderly care and companionship, and patient monitoring to physical and logistical support. Intuitive Surgical, for example, is known for its da Vinci surgical system, a robotic platform that allows surgeons to perform complex procedures with greater precision and control. New companies in the space will need to address training on robotic systems to drive adoption, as well as evolving questions around liability.

More Datasets for Robotic Applications

Unlike with LLMs, it’s still unclear how robotic datasets need to scale to improve performance. VLMs are powerful building blocks that work well with text, but many robotics and physics concepts lie outside of language. Many robots require real-world training data, which is far more difficult to collect than static vision-language datasets. While some startups and researchers are exploring data-free reinforcement learning (which leverages pre-trained models and simulated environments) to reduce dependence on large-scale datasets, these techniques are still in their early stages.

There is a massive gap between the data available to robotic models and the data available to large vision-language models. Beyond quantity, however, the quality, distribution, diversity, and coverage of robotics data matter significantly. As Figure AI recently noted: “data quality and consistency matter much more than data quantity, as a model trained with curated, high quality demonstrations achieves 40% better throughput, despite being trained with ⅓ less data”.

Real-world datasets such as the Open X-Embodiment dataset, the Robotics Surgical Maneuvers dataset for surgical tasks, and datasets from other industry verticals are critical for new applications. More teleoperation, in-the-wild data collection, and open-source collaboration will help move the industry forward.

In the interim, we expect remote teleoperation to generate the high-quality, task-specific data that robotics companies urgently need for these new use cases. For many robotics companies today, teleoperation accounts for a high share of both data collection and task execution. Over time, we expect the ratio of teleoperation to autonomy to fall, with one teleoperator overseeing a growing number of robots in the coming years.

Scalable Evaluations

Alongside the pressing need for actionable data, our understanding of how different data affects performance, and how to evaluate it, is still evolving. Scalable evaluation and benchmarking are needed for performance (measured by metrics like speed, efficiency, and precision), reliability, and safety across a range of tasks and embodiments. Policies can be evaluated in simulation, but simulated results often correlate poorly with real-world performance. Scalable, detailed tracking and evaluation frameworks are needed at the infrastructure level.
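One small but often-skipped piece of evaluation infrastructure is reporting uncertainty on task success rates, since real-robot trials are expensive and sample sizes are small. A minimal sketch, assuming each trial is an independent pass/fail outcome, is the Wilson score interval for a binomial proportion; it is one standard choice among several, not a method the benchmarks above prescribe.

```python
import math

def wilson_interval(successes: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a success rate (z=1.96 gives ~95% coverage)."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    p = successes / trials
    denom = 1 + z**2 / trials
    center = (p + z**2 / (2 * trials)) / denom
    half_width = (z / denom) * math.sqrt(p * (1 - p) / trials + z**2 / (4 * trials**2))
    # Clamp to [0, 1] to absorb floating-point error at the extremes.
    return max(0.0, center - half_width), min(1.0, center + half_width)
```

For example, 45 successes in 50 trials (a 90% observed rate) gives an interval of roughly 0.79 to 0.96, so two policies whose intervals overlap heavily at that sample size are a rough signal that more trials are needed before declaring a winner.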

An RFP for Founders

At Montage, we're gearing up for Fund 4 and looking for founders to partner with in this new era. Whether you're developing next-generation hardware, intelligent automation systems, or something in an entirely new area, we’d love to hear from you and help turn your vision into reality. If you're working on something in the space or enjoyed this thesis, please reach out to connie@montageventures.com.